75% of the web won’t take advantage of a faster experience in Google Chrome

Thoughts about Brotli over TLS

Weird title, right? Google has been working toward a faster web for a while: new APIs, new Chrome features, new protocols (from SPDY to HTTP/2) and PageSpeed Insights. This week, a new compression algorithm appeared, but it won't be available to three quarters of the web.

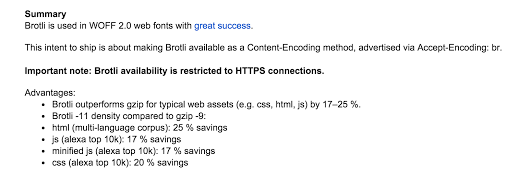

Google recently released Brotli, a new open source compression algorithm that can reduce the size of text-based files such as HTML, CSS and JavaScript by up to 25% compared to gzip or deflate. A few days ago, Ilya Grigorik announced that Chrome will support Brotli for transfers from the server to the browser, using br as the value of the Content-Encoding header; Firefox has also begun testing Brotli.

So Chrome (from version 49 or 50) will advertise Brotli support in its HTTP requests, and if you update your server, it will deliver a smaller version of the same file compressed with Brotli instead of gzip or deflate, resulting in faster page loads and less data traffic for users. Great news for all of us working hard to make a faster web, right?
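On the server side this is ordinary HTTP content negotiation: the browser lists the encodings it accepts in the `Accept-Encoding` request header, and the server picks one and labels the response with `Content-Encoding`. Below is a minimal sketch in Python, assuming a hypothetical `choose_encoding` helper and an assumed server preference order of `br`, then `gzip`, then `deflate`. Real web servers do this through their own modules and configuration.

```python
from __future__ import annotations

# Assumed server preference order: Brotli first, then gzip, then deflate.
SERVER_PREFERENCE = ["br", "gzip", "deflate"]


def parse_accept_encoding(header: str) -> dict[str, float]:
    """Parse e.g. 'gzip, deflate;q=0.5, br' into {coding: q-value}."""
    weights: dict[str, float] = {}
    for part in header.split(","):
        part = part.strip()
        if not part:
            continue
        coding, *params = [p.strip() for p in part.split(";")]
        q = 1.0  # default weight when no q parameter is given
        for param in params:
            name, _, value = param.partition("=")
            if name.strip().lower() == "q":
                try:
                    q = float(value)
                except ValueError:
                    q = 0.0
        weights[coding.lower()] = q
    return weights


def choose_encoding(accept_encoding: str) -> str:
    """Hypothetical helper: return the preferred accepted encoding, or 'identity'."""
    weights = parse_accept_encoding(accept_encoding)
    for coding in SERVER_PREFERENCE:
        # An explicit entry wins; otherwise fall back to the '*' wildcard, if present.
        q = weights.get(coding, weights.get("*", 0.0))
        if q > 0:
            return coding
    return "identity"


print(choose_encoding("gzip, deflate, br"))  # prints "br": client offers Brotli
print(choose_encoding("gzip, deflate"))      # prints "gzip": no Brotli offered
```

Because the server only ever answers with an encoding the client listed, a browser that never sends `br` will never receive Brotli, no matter how the server is configured.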

But wait a minute. The post says that Chrome will support Brotli only over TLS/HTTPS connections. Why?
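The restriction lives on the browser side: over a secure connection Chrome includes `br` in its `Accept-Encoding` header, and over plain HTTP it leaves it out, so the server never gets the chance to choose Brotli. A minimal sketch of that rule, using a hypothetical `accept_encoding_for` function and an assumed baseline of `gzip, deflate` (not Chrome's exact header string):

```python
from urllib.parse import urlsplit

# Assumed baseline encodings; a real browser's header may list others too.
BASE_ENCODINGS = ["gzip", "deflate"]


def accept_encoding_for(url: str) -> str:
    """Hypothetical: build an Accept-Encoding value, offering Brotli only for https:// URLs."""
    encodings = list(BASE_ENCODINGS)
    if urlsplit(url).scheme.lower() == "https":
        # Brotli is advertised only on secure (TLS) connections.
        encodings.append("br")
    return ", ".join(encodings)


print(accept_encoding_for("https://example.com/app.js"))  # gzip, deflate, br
print(accept_encoding_for("http://example.com/app.js"))   # gzip, deflate
```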

With great power there must also come great responsibility!

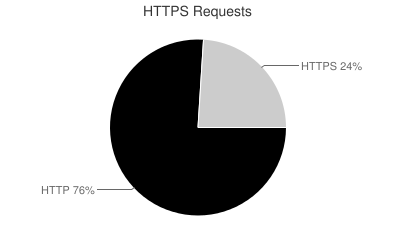

Today, Google has great power in the web community. According to StatCounter, 54% of current web traffic comes from Google Chrome. But according to HTTP Archive, only 24% of traffic is served over HTTPS.

Why is Google not letting 75% of the web take advantage of faster page loads and less data traffic? Google has the power to do it, but is the restriction justified? This article originally blamed Google for not doing it. Within a couple of hours, I got several responses giving me the technical reason for the restriction. However, that reason is never explained when this topic comes up, and I haven't seen any data yet to support it.

Moving to HTTPS

There is a campaign from part of the web community (including Google) to move the whole web to HTTPS. While I'm not entirely sure everyone is aware of it, I understand its purpose: security and privacy. We want to avoid man-in-the-middle attacks and make a safer web.

I understand why new APIs such as Bluetooth, Service Workers and Web Push Notifications work only over HTTPS. I can even understand (though I don't agree yet) why Chrome will deprecate the Geolocation API over insecure HTTP in the near future. We don't want an attacker to use a website to inject malicious JavaScript code. I agree with this.

However, a compression algorithm that can help web performance and save users money by reducing data transfer is something different.

At first, I couldn’t find any technical or privacy reason to hinder access to a better compression algorithm. Update: thanks to this article, Ilya confirmed that the official answer is “HTTPS restriction is to guard against proxies mangling your content.”

The idea is that some proxies out there (how many?) don't follow the HTTP protocol correctly: they try to decompress a response as gzip even when the response says it uses a different compression method. So your content won't be delivered correctly to people whose traffic passes through these old proxies, which can be up to "20% of the traffic for certain segments of the population" according to Ilya Grigorik, Web Performance Engineer at Google and author of High Performance Browser Networking. More about this problem here.
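To make the failure mode concrete, here is a toy model (not real proxy code) of the misbehaving intermediary described above: it assumes any compressed body is gzip and tries to decompress it regardless of the `Content-Encoding` value, while a compliant proxy passes encodings it doesn't handle through untouched. The function names are hypothetical, and the `br` body is stand-in bytes rather than real Brotli output, since Python's standard library has no Brotli codec.

```python
from __future__ import annotations

import gzip


def broken_proxy(headers: dict[str, str], body: bytes) -> bytes:
    """Toy model of a non-compliant proxy: treats every encoded body as gzip."""
    if headers.get("Content-Encoding", "identity") != "identity":
        # Ignores the declared coding and assumes gzip; fails on anything else.
        return gzip.decompress(body)
    return body


def compliant_proxy(headers: dict[str, str], body: bytes) -> bytes:
    """A compliant proxy forwards bodies whose encoding it does not handle."""
    return body


html = b"<html><body>Hello</body></html>"
gzip_response = ({"Content-Encoding": "gzip"}, gzip.compress(html))
# Stand-in bytes for a Brotli body (NOT real Brotli output).
br_response = ({"Content-Encoding": "br"}, b"\x1b\x1e\x00\xf8fake-brotli")

print(broken_proxy(*gzip_response) == html)  # True: gzip survives the proxy
try:
    broken_proxy(*br_response)
except OSError as exc:  # gzip raises an OSError subclass on non-gzip data
    print("broken proxy mangled the br response:", type(exc).__name__)
print(compliant_proxy(*br_response) == br_response[1])  # True
```

Over TLS the proxy can't read or rewrite the body at all, which is the protection the HTTPS-only rule relies on.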

I've asked for data to support this justification. Is the percentage of traffic going through these fossil proxies large enough to justify denying everyone else up to 25% savings in traffic and page load time? I don't know.

The same criticism applies to Firefox, where Brotli tests currently work only over TLS. Nobody there explains why, either.

It doesn't feel right

It still doesn't feel right. If the proxy problem is as big as Google has said since this article was published, can we get some data and reports supporting it? The web community deserves to know the truth so we understand why we are doing what we are doing. Upgrading 75% of web servers to HTTPS doesn't seem possible in a short period, so this advantage (a faster web) will mainly go to bigger vendors, creating a first-class web experience that isn't available to everybody else.

If there is no other solution, let's spread the word on HTTPS; let's create free SSL certificates. I don't think the web community understands the move, and I don't see people moving to TLS en masse. So in the end, it feels like something is not right on the web.

by Maximiliano Firtman